Machine Learning is a current application of Artificial Intelligence based around the idea that we should just be able to give machines access to data and let them learn for themselves. What are the implications for pedestrian simulation now that there is a veritable flood of data becoming available to it?

The first release of Oasys MassMotion pedestrian simulation software was a response to the specific needs of the engineering team working on what has now become the New York Fulton Centre and subway interchange. They had a huge infrastructure project on their hands, with no way of testing their design concept. The MassMotion team developed algorithms that could simulate people moving through 3D space. Impressive as it was, it was developed in what was, relatively speaking, a data drought, dependent on manual sources and inputs.

Since then, Oasys MassMotion software has been under continuous development and is widely used in rail, air and sports hubs as well as complex public spaces. It is now generally accepted that crowd simulation has a key role to play in any design process where there are large numbers of people and/or specific performance criteria to cater for. It is also accepted that MassMotion’s pedestrian simulation is about as near to real human behaviour as it is possible – currently – to get.

MassMotion uses relatively small amounts of input data to drive an iterative cause-and-effect model, which then generates large amounts of predictive data. Now that the data floodgates are set to open, we need to learn how to assimilate all the information.

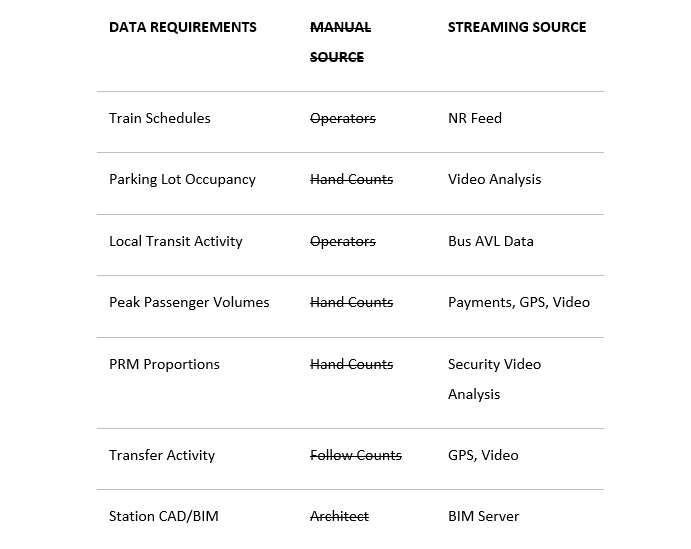

Data that was typically collected manually is now becoming available from streaming sources:

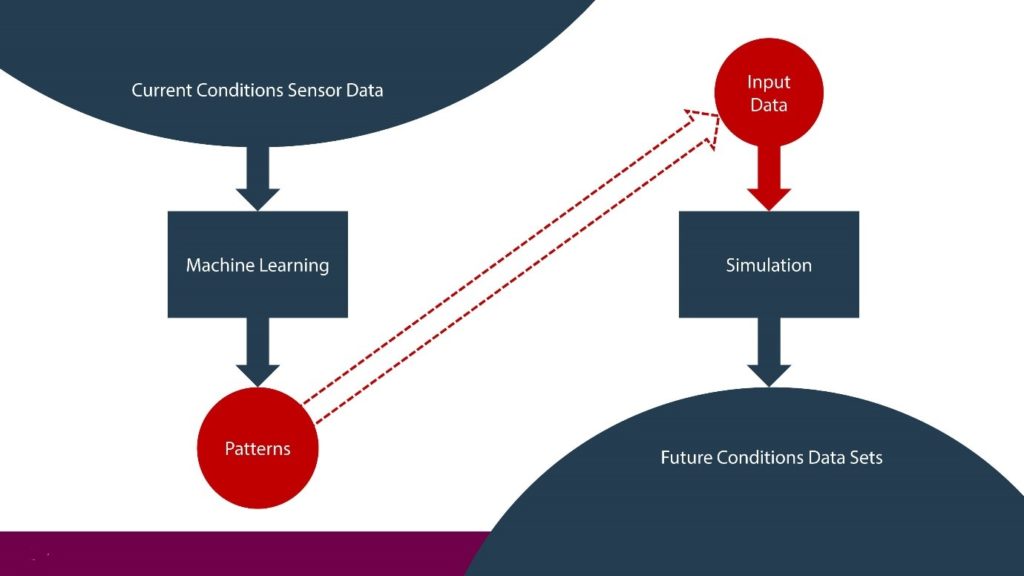

The immediate benefit should be using these large amounts of data to generate models that can turn new input data into meaningful outputs, using techniques including convolutional neural networks, clustering, and regression analyses. These models will be statistically reliable and verifiable. For example: in a given situation, will a person tend to choose the right or the left door?
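To make the door question concrete, here is a minimal sketch of a regression approach: a logistic regression fitted by plain gradient descent. Everything in it is an assumption made for illustration. The two features (how much farther away the left door is, and how much longer its queue is), the function names, and the observations themselves are invented; in practice the rows would come from sensor or survey data, and this is not how MassMotion itself models route choice.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_synthetic_observations(n, rng):
    """Invented stand-in for observed door choices (NOT real data)."""
    rows = []
    for _ in range(n):
        extra_dist_left = rng.uniform(-5.0, 5.0)   # metres the left door is farther than the right
        extra_queue_left = rng.uniform(-5.0, 5.0)  # extra people queuing at the left door
        # Assumed behaviour: people favour the nearer, less crowded door.
        p_right = sigmoid(0.8 * extra_dist_left + 0.5 * extra_queue_left)
        chose_right = 1 if rng.random() < p_right else 0
        rows.append(((extra_dist_left, extra_queue_left), chose_right))
    return rows


def fit_logistic(rows, learning_rate=0.1, epochs=500):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for x, y in rows:
            error = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            grad_w[0] += error * x[0]
            grad_w[1] += error * x[1]
            grad_b += error
        w[0] -= learning_rate * grad_w[0] / n
        w[1] -= learning_rate * grad_w[1] / n
        b -= learning_rate * grad_b / n
    return w, b


def prob_right(model, x):
    """Estimated probability that a person chooses the right-hand door."""
    w, b = model
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
observations = make_synthetic_observations(500, rng)
model = fit_logistic(observations)

# Left door is 4 m farther away and has 3 more people queuing.
print(round(prob_right(model, (4.0, 3.0)), 2))
```

The fitted probability could then feed a simulation as an input parameter, which is where the statistical verifiability matters: the same model can be checked against held-out observations before it is trusted.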

But there are limits… machine learning models only provide meaningful answers when new input data is similar to the data used to train the models. These models cannot generate datasets that describe novel conditions.
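One simple way to respect this limit is to check whether a new input falls inside the range the model was trained on before trusting its answer. The sketch below is a crude proxy for "similar to the training data", not a full novelty detector; the feature names (crowd density and walking speed), the sample values, and the function names are all hypothetical.

```python
def feature_ranges(training_inputs):
    """Return the (min, max) seen for each feature in the training data."""
    columns = list(zip(*training_inputs))
    return [(min(column), max(column)) for column in columns]


def is_within_training_range(x, ranges, tolerance=0.0):
    """True if every feature of x lies inside the range seen in training."""
    return all(
        low - tolerance <= value <= high + tolerance
        for value, (low, high) in zip(x, ranges)
    )


# Hypothetical training inputs: (crowd density in people/m^2, walking speed in m/s)
training_inputs = [(1.2, 0.5), (0.8, 1.5), (2.0, 1.0)]
ranges = feature_ranges(training_inputs)

print(is_within_training_range((1.5, 1.1), ranges))  # conditions like those seen in training
print(is_within_training_range((4.5, 0.2), ranges))  # far denser and slower than anything observed
```

An input that fails the check describes a novel condition, which is exactly the case where a cause-and-effect simulation is still needed to generate the data.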

Ubiquitous and high-quality data collection isn’t here yet, but it will happen sooner rather than later. We are going to need new tools and techniques to handle the new data flood, and now is the time to be resolving issues around ownership and interoperability.

For now, we need to look critically at workflows and identify and focus on areas of poor data integrity. Because simulations are so dependent on such a small amount of input data, it is critical that this data is as good as it can be. In the short term, machine learning and sensor data analytics offer the promise of significant improvement to simulation input data.

Big Data Analytics will improve our understanding of the now and help us to simulate the future. Crowd movement and management will be improved: the buildings and cities we live in will be better suited to the needs of our growing population.