Project Overview

In response to ageing infrastructure and the desire to improve passenger experience, the Metropolitan Transportation Authority (MTA) launched the New York City Transit (NYCT) Subway Enhanced Station Initiative (ESI) Program, which refurbishes stations, introduces modern enhancements, and performs vital repairs to improve the experience of Subway riders. At Grand Central Subway Station, the main purpose of the Program is to improve the experience of the 400,000 passengers who pass through the station daily. Arup's People Movement team used 3D immersive technology and a Virtual Reality (VR) environment to give the station's design review a ground-breaking, user-centric perspective. The 3D and VR environments were built from an Oasys MassMotion model, which enabled the designers to step into the station, visualise the space they were designing, and experience its acoustics, lighting and signage systems.

MassMotion agent point of view (POV) – barriers

How Oasys proved invaluable

Oasys MassMotion is pedestrian and crowd simulation software that aims to capture and simulate the ways in which people behave, move, and interact with one another and with the space around them. MassMotion can be used not only to simulate human movement but also to analyse it. For example, it can show where people linger longest, which areas of a model are busiest over time, and which areas could be improved to increase pedestrian flow and safety.

Arup used MassMotion to assess how the mezzanine level of Grand Central-42nd St Station would perform once the station improvements are completed. These improvements included relieving the congestion highlighted by the MassMotion pedestrian model. VR was also used to show how the finished improvements would address the impeded sightlines and obstructions to movement in the existing station, and how they would improve wayfinding. Improved lighting, wayfinding, architectural finishes and interactive dashboards were all aimed at making the station more pleasant for passengers. To meet the subjective design aim of improving the overall experience for station users, many disciplines collaborated and supported one another. This case study discusses how VR brought these different aspects together, and how inserting the crowd movements from MassMotion made it possible to assess exactly how the work of the intersecting disciplines affected and supported one another.

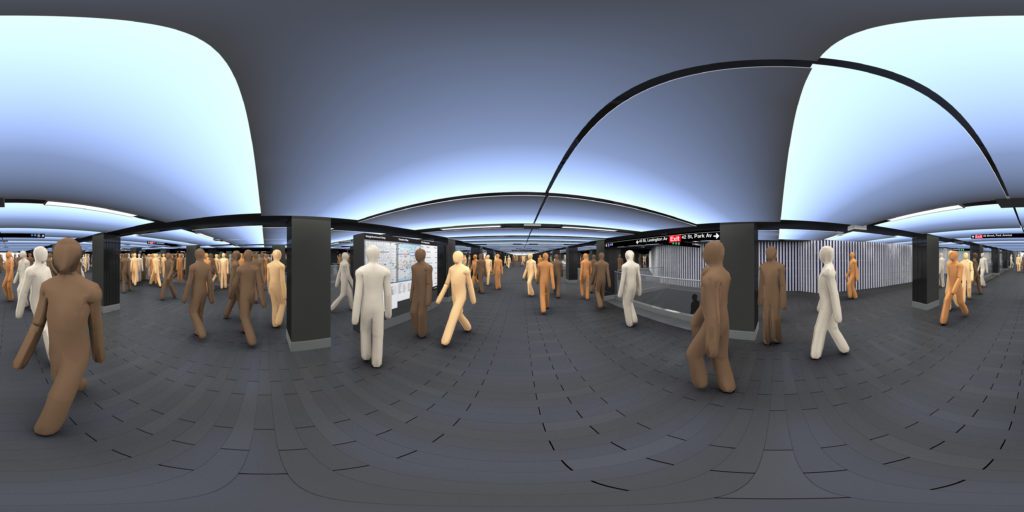

MassMotion agent POV in VR

Creating the simulation meant bringing together a variety of elements. First, a geometric model of the mezzanine was built in Rhino, providing the base into which the other elements were incorporated. A material palette was then created from the Architectural team's Rhino renderings, adding detail to the model and contributing to the realism of the VR experience. Next, the signage and the messaging for each sign were created in Adobe Illustrator, allowing the team to reproduce in the virtual model the signage plan proposed by the project's Wayfinding designers. Agent positions and movement were then established in MassMotion, bringing the space to life: the model accurately simulated the volume of passengers in the busy station and allowed the team to model how these passengers interact in the space. MassMotion was the key element, as many of the other applications and steps served to make the MassMotion model more visually appealing and immersive for the viewer. Lighting design was incorporated into the model using DIALux, accurately representing the lighting designer's plan for the station. These design elements were then combined in 3D Studio Max, which was used to create the virtual experience and to represent the current design elements from each discipline accurately. Lastly, the acoustic soundscape was added using Max/MSP, completing the full VR experience. In Arup's SoundLab, viewers could also have a fully ambisonic (360-degree) acoustic experience that transports them into the station of the future.

Photorealistic design of the Grand Central subway station

Arup placed 3D signage in the virtual space, allowing the Wayfinding designers to see and test how the sign program worked for people moving through it. As a result, the team adjusted the Wayfinding design, ensuring that the final design would work for station users despite the spatial complexity of the busy station.

Before any construction began, the designers used photorealism, VR, and sophisticated visual and aural representations to convey a person's experience within the finished station. This brought to life the human perspective of the design, the intersection of the many disciplines' work, and the impact of the proposed station renovation as part of the planning and consultation process.

Applying a 3D immersive environment unlocked a unique user perspective of the proposed station and its wayfinding plan. It provided a holistic view of a digital space that integrates architectural form with the work of other consulting practices (Transport Planning, Acoustics, Lighting, Wayfinding, etc.) into a cohesive experience for project designers and stakeholders.

MassMotion flythrough of the Grand Central Subway Station model

When designing a space, it is often difficult to get a sense of how it will look and feel when completed, especially if the space will be busy and well used. Arup felt that expressing the real feeling of the space is incomplete without the people. Architectural models are useful for showing the dimensions and physical characteristics of an area; however, adding people brings a new layer to how a space will look and feel, as well as how it will perform. MassMotion's strength in portraying realistic crowd flows within a realistic virtual environment was especially useful in this case.

MassMotion presented the design from a human perspective that is rarely available on most projects. Because the VR walkthrough of the station was taken from a MassMotion agent's point of view, the design could be observed as a person would experience it, rather than through 2D CAD plans or overview renderings.

Grand Central Virtual Reality Walkthrough

MassMotion enhanced both the project and its visualisation. The software realistically showed the movement of people, which gave a more complete picture of the new station and made the model more useful to the design team. It also allowed Arup to demonstrate how busy the space will be after construction finishes, so that each discipline's design could reflect the natural crowd movements predicted for the future. Beyond the visual qualities of the model, Arup also used acoustic simulations in its SoundLab to add another human sense to the virtual experience of the finished station. The acoustic recordings were made in the actual subway station in New York, following the agent's path from the virtual experience through the station itself. This allowed Arup to represent how the acoustic soundscape changes as you move through the station, creating a fully immersive experience for understanding how the space may look, feel and sound.

We’d like to thank Senior Planner James Rimington, Senior Designer Anthony Cortez and the People Movement team at Arup for sharing this work with us.

Find out more about Oasys MassMotion here.